Snowflake is a multi-tenant, transactional, secure, highly scalable and elastic system with full SQL support and built-in extensions for semi-structured and schema-less data.

Architecture

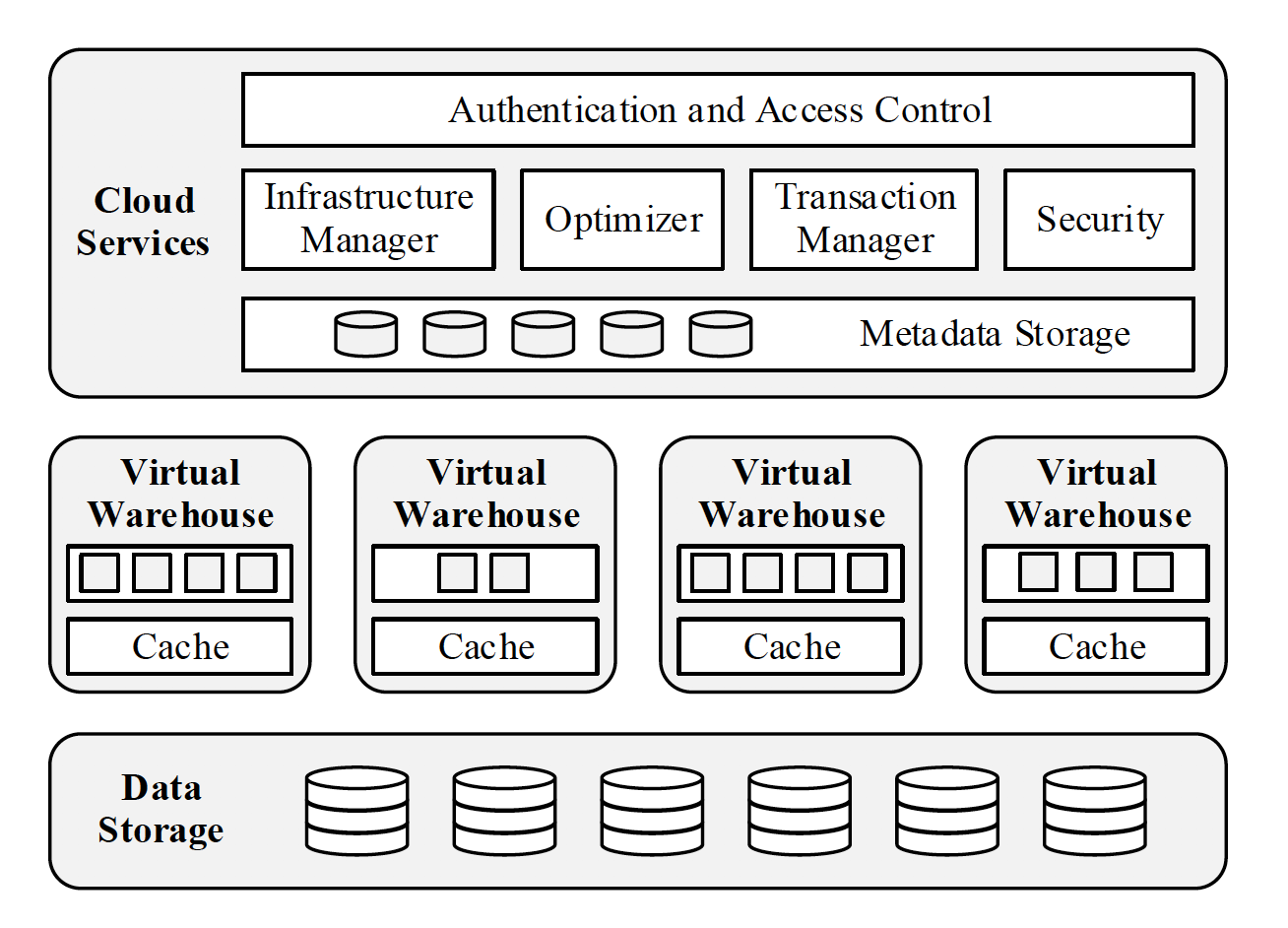

Snowflake is a service-oriented architecture composed of highly fault tolerant and independently scalable services. These services communicate through RESTful interfaces and fall into three architectural layers:

Data Storage

- This layer uses cloud’s blob storage services (S3 in case of AWS) to store table data and query results.

- Tables are horizontally partitioned into large immutable files. Within each file, the values of each attribute or column are grouped together and heavily compressed.

- Each table file has a header which, among other metadata, contains the offsets of each column within the file. Because S3 allows GET requests over parts of files, queries only need to download the file headers and those columns they are interested in.

- It also uses S3 to store temp data generated by query operators (e.g.massive joins) once local disk space is exhausted, as well as for large query results. Spilling temp data to S3 allows the system to compute arbitrarily large queries without out-of-memory or out-of-disk errors.

- Metadata such as catalog objects, which table consists of which S3 files, statistics, locks, transaction logs, etc. is stored in a scalable, transactional key-value store, which is part of the Cloud Services layer.

Virtual Warehouses (Muscle of the System)

- This layer handles query execution within elastic clusters of virtual machines, called virtual warehouses (VW).

- The inidividual EC2 instances that make up a VW are called worker nodes.

- Elasticity and Isolation

- Each individual query runs on exactly one VW. Worker nodes are not shared across VWs, resulting in strong performance isolation for queries.

- When a new query is submitted, each worker node in the respective VW (or a subset of the nodes if the optimizer detects a small query) spawns a new worker process. Each worker process lives only for the duration of its query.

- Local Caching and File Stealing

- Each worker node maintains a cache of table data on local disk. The cache holds file headers and individual columns of files, since queries download only the columns they need.

- The cache lives for the duration of the worker node and is shared among concurrent and subsequent worker processes i.e. queries.

- To improve the hit rate and avoid redundant caching of individual table files across worker nodes of a VW, the query optimizer assigns input file sets to worker nodes using consistent hashing over table file names.

- Whenever a worker process completes scanning its set of input files, it requests additional files from its peers, a technique we call file stealing. If a peer finds that it has many files left in its input file set when such a request arrives, it answers the request by transferring ownership of one remaining file for the duration and scope of the current query. The requestor then downloads the file directly from S3, not from its peer. This design ensures that file stealing does not make things worse by putting additional load on straggler nodes.

Cloud Services (Brain of the System)

- This layer is a collection of services that manage virtual warehouses, queries, transactions, and all the metadata that goes around that: database schemas, access control information, encryption keys, usage statistics and so forth.

- In contrast to VWs, this layer is heavily multi-tenant. Each service of this layer is long lived and shared across users. Each service is replicated for high availability and scalability.

- Query Management and Optimization

- All queries issued by users pass through the Cloud Services layer. Here, all the early stages of the query life cycle are handled: parsing, object resolution, access control, and plan optimization.

- Snowflake’s query optimizer follows a typical cascades-style approach, with top-down cost-based optimization.

- All statistics used for optimization are automatically maintained on data load and updates.

- Many planning decisions are postponed until execution time to reduce the number of bad decisions potentially made by optimizer.

- Once the optimizer completes, the resulting execution plan is distributed to all the worker nodes that are part of the query.

- Concurrency Control

- Snowflake implements ACID transactions via Snapshot Isolation (SI).

- Under SI, all reads by a transaction see a consistent snapshot of the database as of the time the transaction started.

- As customary, SI is implemented on top of multi-version concurrency control (MVCC), which means a copy of every changed database object is preserved for some duration.

- Since table files are backed by S3 (in case of Snowflake on AWS), changes to file can only be made by replacing it with a different file that includes the changes. This essentially creates a new version of the table file tracked in the metadata.

- Pruning

- Since Snowflake doesn’t implement indexing (as it’s against Snowflake’s pure service approach and also, magnifies challenges around data management), it relies on an alternative technique called Pruning.

- Here, the system maintains the data distribution information for a given chunk of data, in particular minimum and maximum values within the chunk. Depending on the query predicates, these values can be used to determine that a given chunk of data might not be needed for a given query.

- Unlike traditional indices, this metadata is usually order of magnitude smaller than the actual data, resulting in a small storage overhead and fast access.

Feature Highlights

Pure Software-as-a-Service (SaaS) Experience

- Snowflake’s service aspect extends to the whole user experience. There are no tuning knobs, no physical design, no storage grooming tasks on the part of users.

Continuous Availability

- Fault Resilience

- Snowflake tolerates individual and correlated node failures at all levels of the architecture.

- The Data Storage layer (which is S3 in AWS), benefits from the high data availability (99.99%) and durability (99.999999999%) numbers of the underlying platform.

- Snowflake’s metadata store is also distributed and replicated across AZs ensuring high availability/durability standards as well.

- VWs are not distributed across AZs (due to performace reasons, specifically network throughput). Hence, if an entire AZ becomes unavailable, all queries running on a given VW of that AZ will fail. In such scenarios (exceedingly rare events), the user needs to actively re-provision the VW in a different AZ.

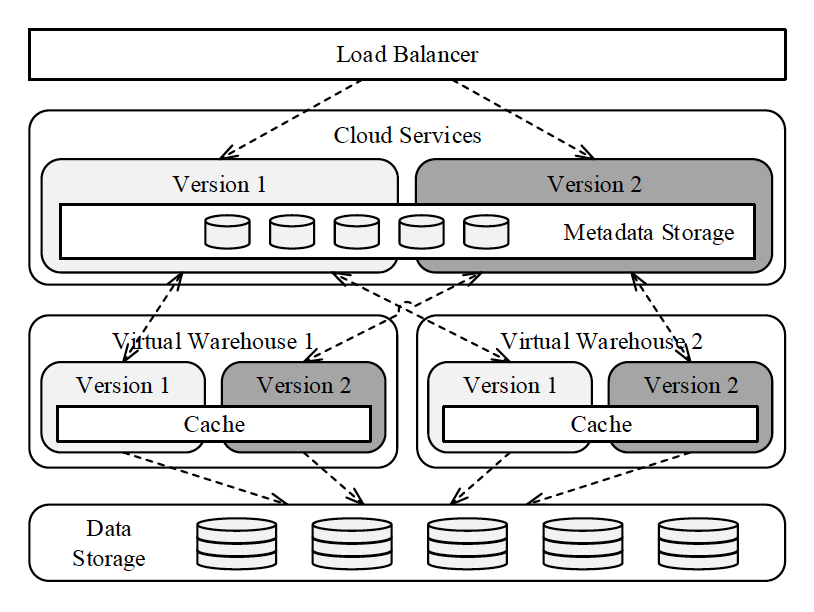

- Online Upgrade

- As all Snowflake’s Cloud/VW services are stateless, it lets the system allow multiple versions of the various services to be deployed side-by-side.

- A typical software upgrade process looks like following -

- Deploy the new version of the service alongside the previous version.

- User accounts are then progressively switched to the new version and all queries already executing against the older version are allowed to run to completion.

- Once all queries and users have finished using the previous version, all services of that version are terminated and decommisioned.

Semi-Structured and Schema-Less Data

- OOTB built-in functions and SQL extensions for traversing, flattening, and nesting of semi-structured data, with support for popular formats such as JSON and Avro.

- Automatic schema discovery and columnar storage make operations on schema-less, semi-structured data nearly as fast as over plain relational data, without any user effort.

Time Travel and Cloning

- As mentioned above in Pruning section above, Snowflake implements Snapshot Isolation (SI) on top of multi-version concurrency control (MVCC) and write operations on a table produce a newer version of the table by adding and removing whole files.

- When files are removed by a new version, they are retained for a configurational duration (up to 90 days). File retention allows Snowflake to read earlier versions of tables very efficiently; that is, to perform time travel on the database.

- One can even access different versions of the same table in a single query.

SELECT new.key, new.value, old.value FROM my_table new JOIN my_table AT(OFFSET => -86400) old ON new.key = old.key WHERE new.value <> old.value; - Snowflake also implements a functionality called cloning, expressed through the new keyword CLONE. Cloning a table creates a new table with the same definition and contents quickly and without making physical copies of table files. The clone operation simply copies the metadata of the source table. Right after cloning, both tables refer to the same set of files, but both tables can be modified independently thereafter.

Security

- All data including temporary files and network traffic is encrypted end-to-end.

- Additionally, role-based access control gives users the ability to exercise fine grained access control on the SQL level.

- Snowflake Uses strong AES 256-bit encryption with a hierarchical key model where each level of (parent) keys encrypts the level of (child) keys below. Hierarchical key models are good security practice because they constraint the amount of data each key protects.

- Each level of the key hierarchy undergoes key rotation and rekeying, including the root key.